Understanding Kubernetes: Pods, Deployments, and Services Explained

Introduction

Kubernetes is an open-source container orchestration engine for automating the deployment, scaling, and management of containerized applications. At the heart of Kubernetes lie the concepts of Pods, Deployments, and Services. In this blog, we will learn about Pods, Deployments, and Services, and how running workloads with them differs from running containers with Docker. Let's dive in!

Pods

Pods are the fundamental units of deployment in Kubernetes: the smallest objects we can create and manage. We can also think of a Pod as the definition of how to run a container. Pods provide a lightweight and scalable execution environment for containers. A Pod can contain a single container or multiple containers. Containers in the same Pod share a network namespace, so they have the same IP address and can communicate with each other over `localhost`; they can also share storage volumes.

Pods help achieve this lightweight, scalable execution: when an application is scaled, Kubernetes adds or removes whole Pods, so all the containers within a Pod are scaled together as a unit. This ensures consistent behavior and preserves the communication between those containers.

A Pod is basically a wrapper around one or more containers.
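To make the shared network namespace concrete, here is a minimal sketch of a two-container Pod, assuming the public `nginx` and `busybox` images. The Pod name `web-with-sidecar`, the container names, and the sidecar's polling loop are hypothetical choices for illustration only. The sidecar reaches nginx on `localhost:80` without knowing any IP address, because both containers share the Pod's network.

```yaml
# Hypothetical two-container Pod: nginx plus a busybox "sidecar" that
# polls nginx over localhost. This works only because both containers
# share the Pod's network namespace (same IP, same port space).
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar   # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:latest
      ports:
        - containerPort: 80
    - name: sidecar
      image: busybox:1.36
      # Every 10 seconds, fetch the nginx page via localhost and print "ok" on success.
      command: ["sh", "-c", "while true; do wget -q -O /dev/null http://localhost:80 && echo ok; sleep 10; done"]
```

After applying it, `kubectl logs web-with-sidecar -c sidecar` should print `ok` lines once nginx is serving.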

Running a Pod is similar to running a container in Docker. The difference is that in Docker we start a container with a CLI command, whereas in Kubernetes we describe the container in a Pod YAML file.

Example: creating a container from the nginx image

Docker:

```shell
docker run -d -p 8080:80 --name my-container nginx:latest
```

Kubernetes Pod YAML:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: nginx:latest
      ports:
        - containerPort: 80
```

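Save the manifest as pod.yaml and create the Pod with:

```shell
kubectl apply -f pod.yaml
```

Note that `containerPort` only documents which port the container listens on; unlike `-p 8080:80` in the Docker command, it does not publish the port outside the cluster. Exposing Pods is the job of Services, covered later in this post.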

Although Pods bring some enterprise-level capabilities, the major features that make Kubernetes (k8s) powerful are still missing, namely auto-healing and auto-scaling. A Pod is essentially just a run specification written in a YAML manifest: if the Pod is deleted or its node fails, nothing recreates it. That is why we need Deployments.

Deployment

A Deployment is a mechanism for efficiently managing replicas and updates, and it is what gives us the auto-healing feature. Good Kubernetes practice is therefore not to create Pods directly, but to create a Deployment, which creates the Pods for us.

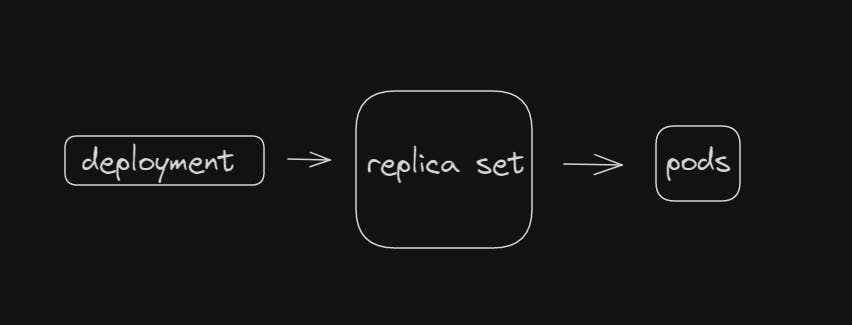

Behind the ReplicaSet is a Kubernetes controller (the ReplicaSet controller), which ensures that the number of running Pods matches the replica count in the Deployment YAML manifest.

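Controllers can be the built-in ones that ship with Kubernetes, such as the ReplicaSet controller, or custom controllers installed on the cluster, such as the one Argo CD runs. Admission controllers, despite the name, work differently: they intercept requests to the API server rather than continuously reconciling objects.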

WORKFLOW:

1. We create a Deployment object in Kubernetes by defining its specification in a Deployment YAML file, including the desired state of the application, the number of replicas, and any other relevant configuration.
2. When the Deployment is created, or when its Pod template is updated, Kubernetes creates a ReplicaSet based on that configuration. The ReplicaSet is responsible for ensuring that the desired number of replicas is always running.
3. The ReplicaSet creates and manages Pods, building new Pods from the template specified in the Deployment.
4. If a Pod is deleted (for example, by accident) or fails, the ReplicaSet notices that fewer Pods are running than desired and automatically creates a replacement.

EXAMPLE of a Deployment YAML file:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
```

In other words, a Deployment is an abstraction: we only create the Deployment, and it creates the ReplicaSet and the Pods for us.
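Deployments also handle updates: when the Pod template changes (for example, the image tag), the Deployment replaces old Pods with new ones gradually. Below is a minimal sketch of the same `nginx-deployment` with an explicit update strategy. The `strategy` values and the `nginx:1.16.1` tag are illustrative assumptions, not recommendations; if `strategy` is omitted, Kubernetes uses `RollingUpdate` with 25% `maxSurge` and 25% `maxUnavailable`.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  # Replace Pods gradually whenever the Pod template changes.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # at most 1 Pod above the desired 3 during the update
      maxUnavailable: 0  # never go below 3 available Pods
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.16.1   # changing this tag is what triggers a rollout
          ports:
            - containerPort: 80
```

Applying this over the earlier manifest (which used `nginx:1.14.2`) makes the Deployment create a new ReplicaSet and shift Pods to it; `kubectl rollout status deployment/nginx-deployment` reports progress, and `kubectl rollout undo deployment/nginx-deployment` rolls back.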

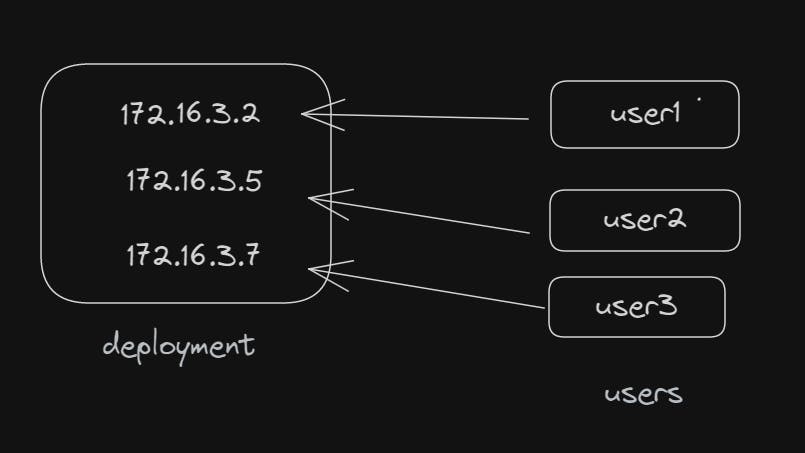

Creating a Deployment solves auto-healing, but one problem remains. When a Pod is deleted and a replacement is created, the new Pod gets a different IP address from the old one. Clients that were using the old IP address do not know the new one, so they cannot reach the new Pod, which breaks load balancing and anything else that relied on the old address.

To solve this problem, Kubernetes uses a Service. Let's talk about Services!

Service

A Service facilitates communication and load balancing within the cluster, and it complements auto-healing by keeping replacement Pods reachable. Services provide a stable network endpoint for a set of Pods, enabling other components within the cluster to discover and access the Pods reliably.

Without a Service, users cannot reach a newly created Pod, because it has a different IP address.

In the case shown below, if the Pod with IP address 172.16.3.2 dies and a replacement Pod is created with IP address 172.16.3.1, the end user, who only knows the old address, can no longer reach the application.

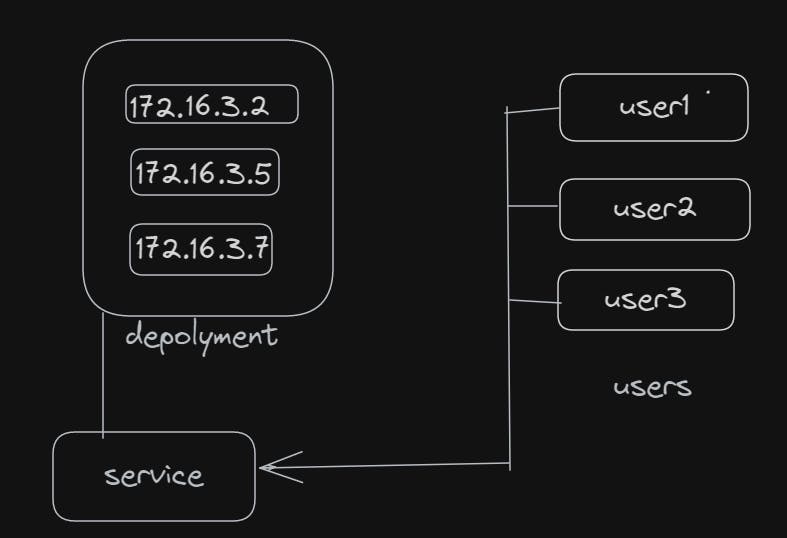

To solve the problem, we create a Service on top of the Deployment. Kubernetes assigns the Service a unique IP address and a DNS name, and the user accesses the application through these instead of through individual Pod IPs. This IP address serves as a stable network endpoint for the Service, regardless of changes in the underlying Pods.

The Service also acts as a load balancer, distributing traffic across its Pods with the help of the Kubernetes component kube-proxy.

But there is still a problem. The user can now rely on the Service (SVC) IP address, but if the Service tracked its Pods by IP address, it would have to keep up with old and new Pod IPs every time a Pod is replaced, which is not a good approach.

That is why a Service uses labels and selectors for service discovery instead of IP addresses. When the ReplicaSet creates a new Pod from the same template in the YAML manifest, the new Pod gets the same labels even though its IP address changes.

In this way, the Service can easily keep track of new Pods, which is what makes it work for service discovery.
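A minimal sketch of such a Service, assuming the `nginx-deployment` example above, whose Pods carry the label `app: nginx`. The name `nginx-service` is a hypothetical choice. Because no `type` is given, this is a ClusterIP Service, the default.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service   # hypothetical name; also used as the Service's DNS name
spec:
  # Every Pod labeled app=nginx becomes a backend of this Service,
  # whatever IP address it happens to have.
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80         # port on the Service's stable cluster IP
      targetPort: 80   # containerPort on the selected Pods
```

`kubectl describe service nginx-service` lists the Pod IPs the Service currently routes to under `Endpoints`; after deleting one of the Pods, the replacement's new IP should appear there without any change to the Service.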

Another main feature of a Service is its ability to expose the application to the outside world.

There are mainly three types of Service:

- ClusterIP
- NodePort
- LoadBalancer

ClusterIP

This is the default Service type. It gives the Service an internal IP address that is reachable only from inside the cluster, so only someone with access to the Kubernetes cluster can use it.
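To illustrate that a ClusterIP Service is reachable from inside the cluster, here is a sketch of a throwaway client Pod. It assumes a ClusterIP Service named `nginx-service` (a hypothetical name) in the `default` namespace, and a cluster using the default `cluster.local` DNS domain, so that `nginx-service.default.svc.cluster.local` resolves to the Service's cluster IP.

```yaml
# Hypothetical one-shot client Pod that calls a ClusterIP Service by DNS name.
# The same URL is not resolvable from a machine outside the cluster.
apiVersion: v1
kind: Pod
metadata:
  name: service-client   # hypothetical name
spec:
  restartPolicy: Never   # run the request once, then stop
  containers:
    - name: client
      image: busybox:1.36
      # Print the response body ("-O -" writes to stdout) so it shows up in the logs.
      command: ["wget", "-q", "-O", "-", "http://nginx-service.default.svc.cluster.local"]
```

Once the Pod completes, `kubectl logs service-client` should show the nginx welcome page.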

NodePort

With a NodePort Service, Kubernetes opens a port on all of the cluster's nodes and forwards traffic from that port to the Service. This lets people in the organization (or anyone else who can reach the nodes) access the Service through any node's IP address together with the NodePort.
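A minimal NodePort sketch, assuming Pods labeled `app: nginx` as in the Deployment example; the name `nginx-nodeport` and the port `30080` are illustrative choices. If `nodePort` is omitted, Kubernetes picks a free port from the node port range itself.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-nodeport   # hypothetical name
spec:
  type: NodePort
  selector:
    app: nginx           # same labels as the Deployment's Pods
  ports:
    - protocol: TCP
      port: 80           # Service port inside the cluster
      targetPort: 80     # containerPort on the Pods
      nodePort: 30080    # opened on every node; must fall in the node port range (default 30000-32767)
```

The application should then be reachable at `http://<node-ip>:30080` from any machine that can reach one of the nodes.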

LoadBalancer

When you create a Service of type LoadBalancer, it allows the outside world to access the application. It does this with the help of an underlying Kubernetes component called the Cloud Controller Manager (CCM). Suppose AWS is our cloud provider, as in an EKS cluster: the CCM learns about the new Service through the Kubernetes API server and asks AWS to provision an Elastic Load Balancer (ELB). The load balancer's public address (on AWS, a DNS name rather than a bare IP address) is then written into the Service's status, and anyone can use it to access the application over the internet.
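A minimal LoadBalancer sketch, again assuming Pods labeled `app: nginx`; the name `nginx-lb` is illustrative. It only gets an external address on a cluster that has a cloud controller, such as EKS. Provider-specific behavior, for example which kind of AWS load balancer is created, is usually tuned with annotations that are not shown here.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-lb   # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: nginx     # route to the Deployment's Pods
  ports:
    - protocol: TCP
      port: 80         # port exposed by the cloud load balancer
      targetPort: 80   # containerPort on the Pods
```

`kubectl get service nginx-lb` shows the address in the `EXTERNAL-IP` column once the cloud provider has provisioned it; until then the column reads `<pending>`.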

Conclusion

In conclusion, Kubernetes Pods, Deployments, and Services are essential components for managing containerized applications. Pods provide an execution environment for containers, while Deployments enable efficient replica management and updates. Services facilitate communication and load balancing within the cluster. Together, they empower organizations to deploy, scale, and maintain applications effectively in a Kubernetes environment, streamlining the container orchestration process and ensuring optimal performance and availability.