Understanding AWS CloudFront | Day 19

Introduction

Amazon CloudFront is the content delivery network (CDN) service provided by Amazon Web Services (AWS). We can place CloudFront in front of an S3 bucket, an EC2 instance, or a load balancer so that clients do not send requests directly to the origin server.

To understand CloudFront better, we should first know what a CDN is. A CDN is a set of geographically distributed servers that reduces latency for users, even when they are located far from the main server.

How It Works

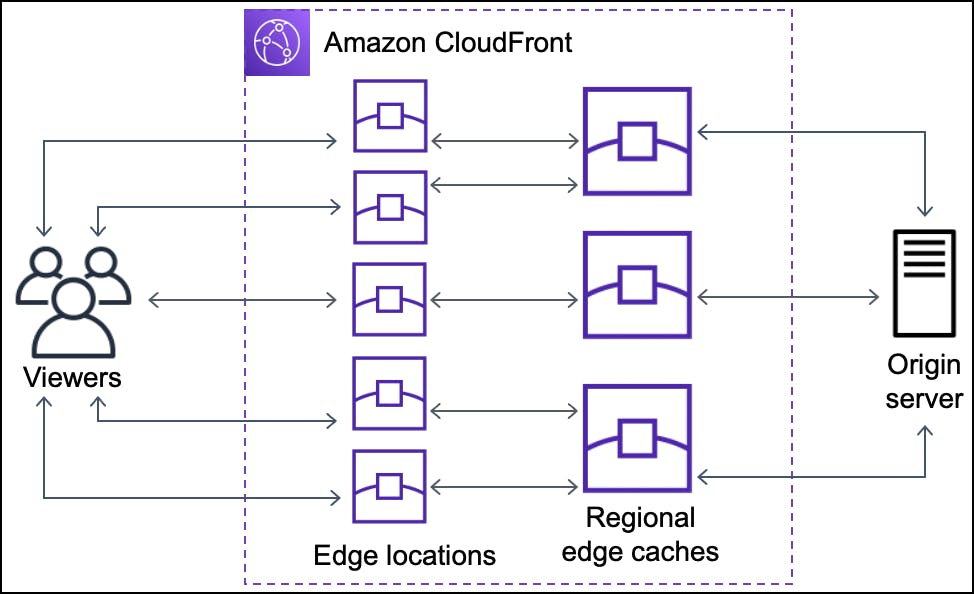

Amazon's CDN service, CloudFront, works the same way. It has many edge locations around the world that store copies of the requested data in a cache. When a client requests the data, the request is handled by a nearby edge server instead of travelling to the main server, which may be far away, and this reduces latency.

When a user opens our website or application and requests an object, such as an image file or an HTML file, DNS routes the request to a CloudFront POP (point of presence, i.e. an edge location). CloudFront checks its cache there. If the object is in the cache, CloudFront returns it right away. If it is not, CloudFront forwards the request to the origin server, the origin responds with the object, and CloudFront returns it to the user and also keeps it in the cache for future requests.

Regional edge caches

CloudFront also has regional edge caches, which keep objects cached even when the content is not popular enough to stay at an edge location.

They’re located between our origin server and the edge locations.

As the figure above shows, if the content is not at the edge location, the regional edge cache is checked before the request is forwarded to the origin server. A regional edge cache is larger than the cache at an edge location, so it can hold less popular content that the edge location has already dropped.

As with an edge location, if the content is not found in the regional edge cache, the request is forwarded to the origin server. CloudFront keeps persistent connections with origin servers so that objects are fetched from the origin as quickly as possible. The content is then cached at both the regional edge cache and the edge location.
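The lookup order described above (edge location, then regional edge cache, then origin) can be sketched as a small simulation. This is only an illustration: the `LRUCache` class, the `fetch` function, the cache sizes, and the least-recently-used eviction rule are all assumptions made for the example, not a description of how CloudFront is implemented internally.

```python
from collections import OrderedDict


class LRUCache:
    """A tiny least-recently-used cache standing in for one cache tier."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key, value):
        self.items[key] = value
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used entry


def fetch(path, edge, regional, origin):
    """Return (body, served_from), checking edge -> regional -> origin."""
    body = edge.get(path)
    if body is not None:
        return body, "edge"
    body = regional.get(path)
    if body is not None:
        edge.put(path, body)  # refill the edge cache on the way back
        return body, "regional"
    body = origin[path]  # the origin is a plain dict in this sketch
    regional.put(path, body)
    edge.put(path, body)
    return body, "origin"


origin = {"/index.html": "<h1>home</h1>", "/logo.png": "png-bytes"}
edge = LRUCache(capacity=1)      # small cache close to the user (assumed size)
regional = LRUCache(capacity=3)  # larger cache between edge and origin (assumed size)

requests = ["/index.html", "/index.html", "/logo.png", "/index.html"]
trace = [fetch(path, edge, regional, origin)[1] for path in requests]
print(trace)  # ['origin', 'edge', 'origin', 'regional']
```

The last request shows the point of the regional tier: `/index.html` was pushed out of the small edge cache by `/logo.png`, but the larger regional cache still had it, so the origin was not contacted again.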

NOTE:

A cache invalidation request removes an object from both POP caches and regional edge caches before it expires.

Proxy HTTP methods (PUT, POST, PATCH, OPTIONS, and DELETE) go directly to the origin from the POPs and do not proxy through the regional edge caches.

Creating the CloudFront Distribution

We can use different services as an origin, such as an S3 bucket, an EC2 instance, or a load balancer.

S3 OBJECT

Let us create an S3 object and access it through CloudFront while restricting public requests to the S3 bucket, which improves both efficiency and security.

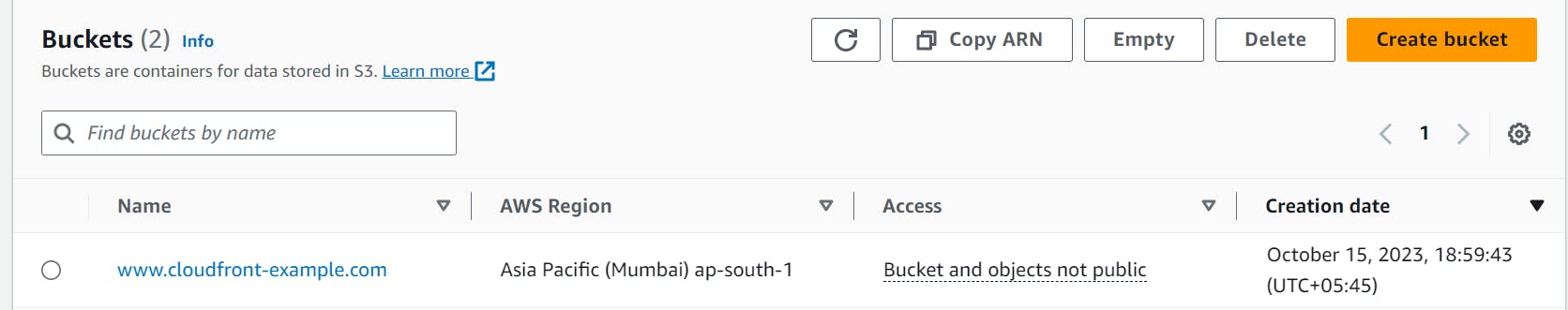

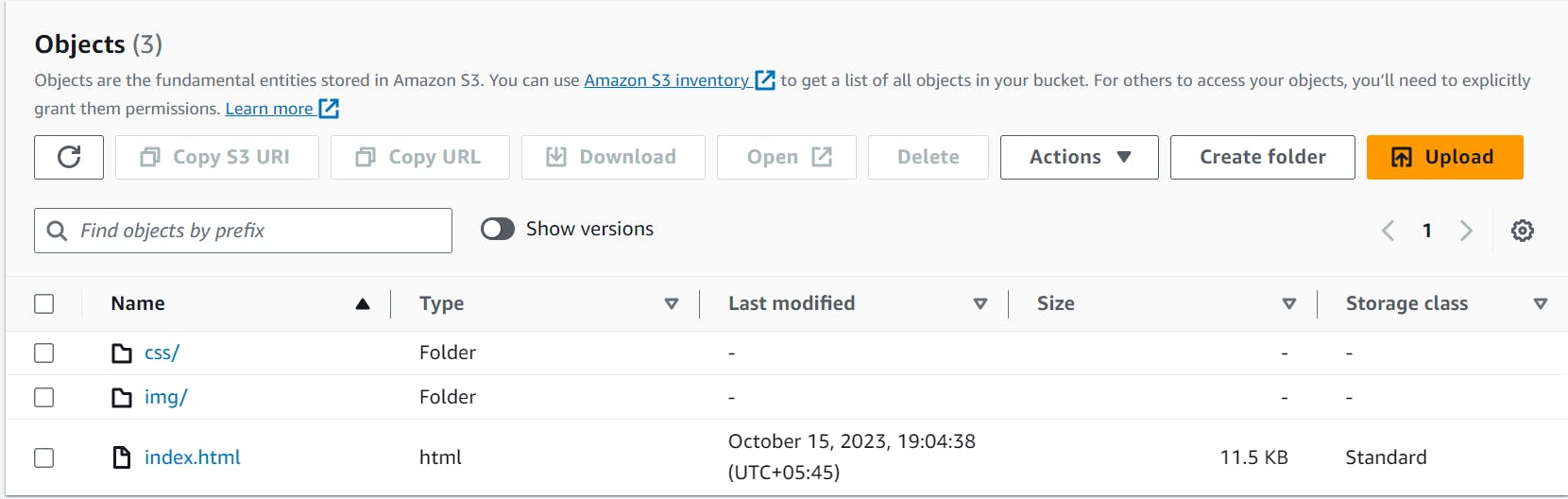

We have created the S3 bucket where we will host our static website.

We have uploaded our object to the S3 bucket with public URL access disabled.

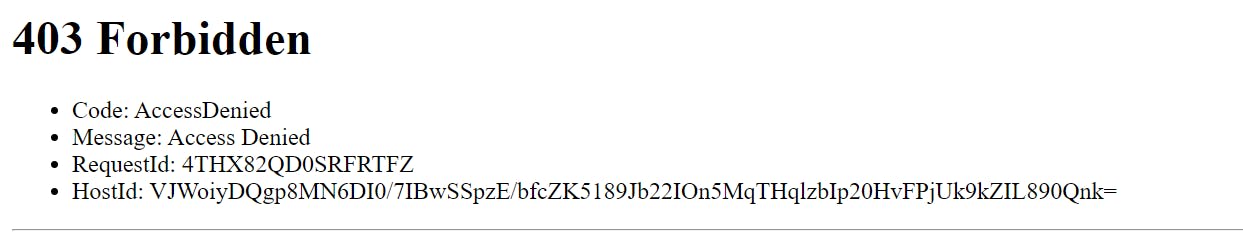

When we try to open the object through its S3 object URL, the site cannot be reached because we have blocked direct access.

Now we will access it through CloudFront. We set our S3 bucket as the origin and create the distribution, configure an OAI (Origin Access Identity) for it, and update the bucket policy so that CloudFront is allowed to read the object.
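The bucket policy update mentioned above can be sketched as follows. This is a minimal example that only builds the policy document; the function name `build_oai_bucket_policy`, the bucket name, and the OAI ID are placeholders chosen for illustration and are not taken from the screenshots in this walkthrough.

```python
import json


def build_oai_bucket_policy(bucket_name, oai_id):
    """Build an S3 bucket policy that lets one CloudFront OAI read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontOAIRead",
                "Effect": "Allow",
                "Principal": {
                    "AWS": (
                        "arn:aws:iam::cloudfront:user/"
                        f"CloudFront Origin Access Identity {oai_id}"
                    )
                },
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


# Placeholder values (assumptions), replace with your own bucket and OAI ID.
policy = build_oai_bucket_policy("my-static-site-bucket", "E2EXAMPLE1OAI")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the bucket policy editor in the S3 console. Because the policy only grants `s3:GetObject` to the OAI, the bucket's public access block can stay enabled.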

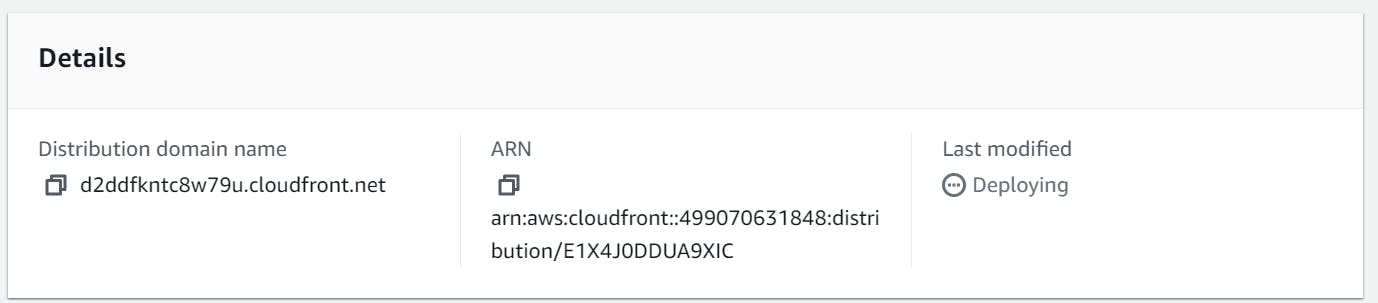

We have created the distribution, which is in the Deploying state. This takes some time because CloudFront has to propagate the configuration to its edge locations around the world.

Now, with the CloudFront URL, we can access our static website hosted in the S3 bucket.

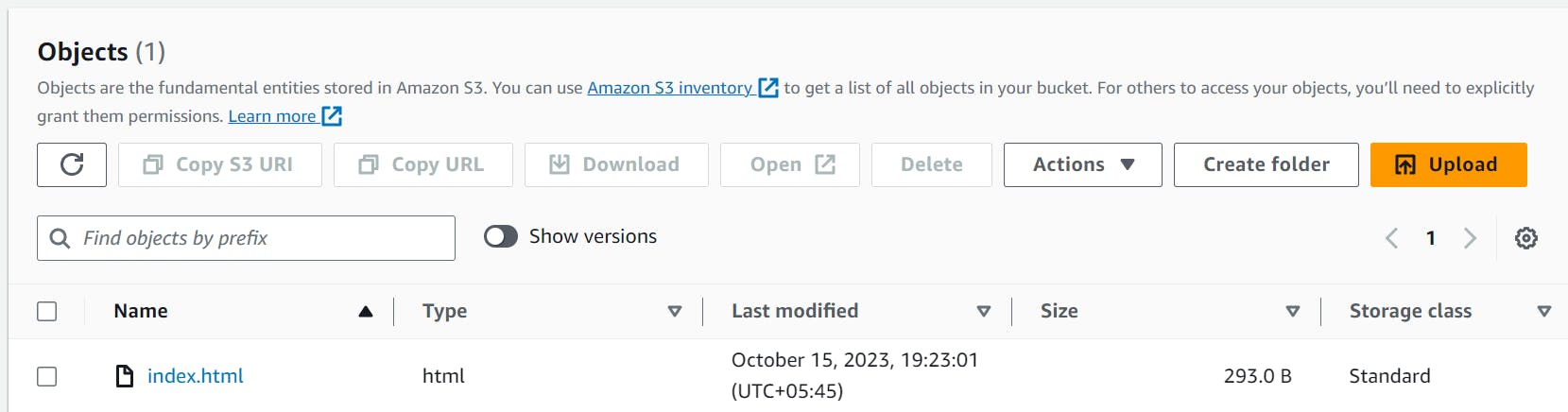

Case of the Cache Not Being Up to Date With the Origin Content

Stale content can occur because an edge location may answer a request from its cache after the content at the origin has changed. For static content this is rare, but for dynamic content it is more likely. There are several ways to handle it, including:

1) Cache-Control Headers:

We can send HTTP caching headers, such as "Cache-Control" and "Expires", in our origin server's responses. By setting appropriate values in these headers, we control how long CloudFront caches our content.

e.g.

Cache-Control: max-age=3600

This specifies the maximum amount of time (in seconds) that a resource can be cached. After this period, the cache should revalidate the content with the origin server.

2) Cache Invalidation:

CloudFront supports cache invalidation, which lets us purge specific content from the cache manually or programmatically before it expires.
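Both approaches can be driven from code. The sketch below assumes the origin is an S3 bucket and that `boto3` is used to talk to AWS; the helper names `put_object_args`, `build_invalidation_batch`, and `invalidate`, as well as the bucket, key, and path values, are hypothetical. The two builder functions are plain Python and run without AWS access, while `invalidate` needs `boto3` and valid credentials and is not called here.

```python
import time


def put_object_args(bucket, key, body, max_age_seconds):
    """Arguments for an S3 upload that attaches a Cache-Control header."""
    if max_age_seconds < 0:
        raise ValueError("max-age must not be negative")
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "CacheControl": f"max-age={int(max_age_seconds)}",
    }


def build_invalidation_batch(paths, caller_reference=None):
    """Build the InvalidationBatch payload for a CloudFront invalidation."""
    paths = list(paths)
    for path in paths:
        if not path.startswith("/"):
            raise ValueError(f"invalidation paths must start with '/': {path!r}")
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        # Must be unique per request; a timestamp is one simple choice.
        "CallerReference": caller_reference or str(time.time()),
    }


def invalidate(distribution_id, paths):
    """Send the invalidation with boto3 (needs AWS credentials; not run here)."""
    import boto3  # imported lazily so the builders above work without boto3

    client = boto3.client("cloudfront")
    return client.create_invalidation(
        DistributionId=distribution_id,
        InvalidationBatch=build_invalidation_batch(paths),
    )


upload = put_object_args("my-static-site-bucket", "index.html", b"<h1>hi</h1>", 3600)
print(upload["CacheControl"])

batch = build_invalidation_batch(["/index.html", "/css/*"], caller_reference="demo-1")
print(batch)
```

The `upload` dictionary could be passed to an S3 client as `s3.put_object(**upload)`, so that S3 returns the header with the object and CloudFront honours it. Invalidation is the manual escape hatch for when content changes before its `max-age` has elapsed.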

Limiting Access to EC2 to Only CloudFront

For an S3 bucket, we can block all public access when creating the bucket. An EC2 instance, however, can still be reached directly through its public IP address, which we don't want.

To prevent this, we can update the security group's inbound rules so that they only allow requests from the CloudFront IP range. AWS itself maintains the list of CloudFront IP ranges, and we can find it under Managed Prefix Lists in the VPC console and reference it in the inbound rule.

In this way, only CloudFront is allowed to talk to the origin server, i.e. the EC2 instance.
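The inbound rule described above can also be created from code. The following is a sketch that assumes `boto3`, a web server listening on port 80, and the AWS-managed prefix list named `com.amazonaws.global.cloudfront.origin-facing`; the helper names and the example prefix list ID are hypothetical. Only the rule builder is executed here, since `allow_cloudfront_only` needs AWS credentials.

```python
CLOUDFRONT_PREFIX_LIST_NAME = "com.amazonaws.global.cloudfront.origin-facing"


def build_cloudfront_only_rule(prefix_list_id, port=80):
    """Build an inbound rule that admits TCP traffic only from a prefix list."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "PrefixListIds": [
            {
                "PrefixListId": prefix_list_id,
                "Description": "CloudFront origin-facing",
            }
        ],
    }


def allow_cloudfront_only(security_group_id, port=80):
    """Look up the managed prefix list and add the rule (needs AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    ec2 = boto3.client("ec2")
    found = ec2.describe_managed_prefix_lists(
        Filters=[
            {"Name": "prefix-list-name", "Values": [CLOUDFRONT_PREFIX_LIST_NAME]}
        ]
    )
    prefix_list_id = found["PrefixLists"][0]["PrefixListId"]
    return ec2.authorize_security_group_ingress(
        GroupId=security_group_id,
        IpPermissions=[build_cloudfront_only_rule(prefix_list_id, port)],
    )


# Placeholder prefix list ID (assumption); the real one differs per region.
rule = build_cloudfront_only_rule("pl-0123456789abcdef0")
print(rule)
```

Adding this rule is not enough on its own: any existing inbound rule that allows the same port from `0.0.0.0/0` has to be removed, otherwise the instance stays reachable through its public IP address.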
